I recently attended the Evidence-Based Medicine Live19 conference at Oxford University, where Professor Isabelle Boutron from Paris Descartes University gave a lecture entitled ‘Spin or Distortion of Research Results’. Simply put, research spin is ‘reporting to convince readers that the beneficial effect of the experimental treatment is greater than shown by the results’ (Boutron et al., 2014). In a study of oncology trials, spin was found in 59% of the 92 trials in which the primary outcome was negative (Vera-Badillo et al., 2013). I would argue that spin also affects a large proportion of dental research papers.

To illustrate how subtle this problem can be, I have selected a recent systematic review (SR) on pulpotomy that was posted on the Dental Elf website (Li et al., 2019). Pulpotomy is the removal of a portion of the diseased pulp, in this case from a decayed permanent tooth, with the aim of maintaining the vitality of the remaining pulp tissue by means of a therapeutic dressing. Li’s SR compared the effectiveness of calcium hydroxide with that of a newer therapeutic dressing material, mineral trioxide aggregate (MTA).

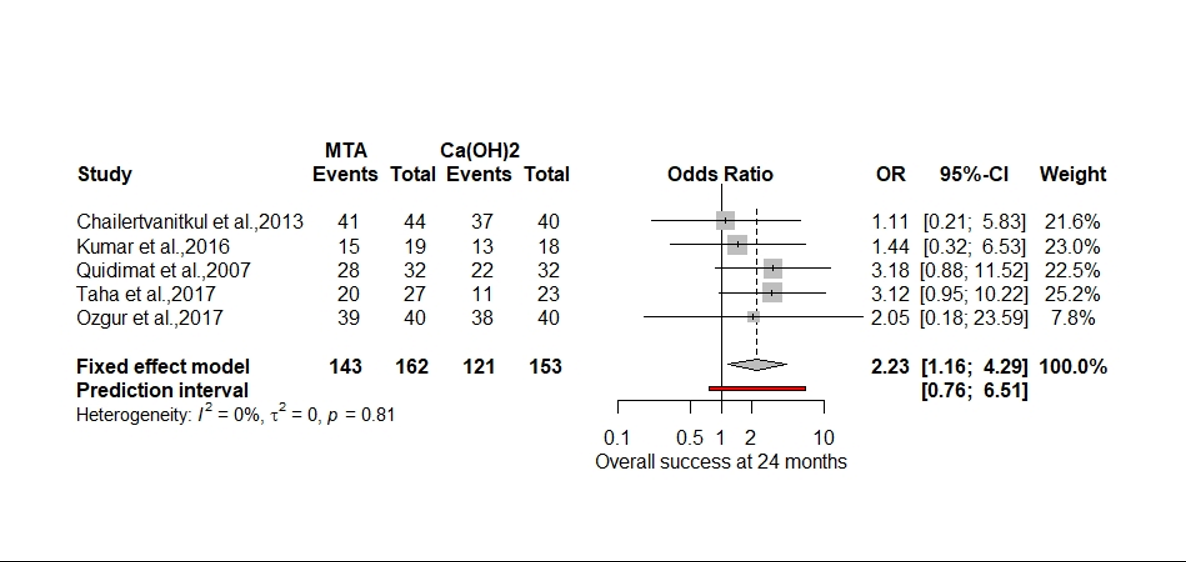

In the abstract, Li states that the meta-analysis favours MTA. In the results section of the SR, Li reports that ‘MTA had higher success rates in all trial[s] at 12 months (odds ratio, 2.23, p = 0.02, I² = 0%)’, and finally concludes that ‘mineral trioxide aggregate appears to be the best pulpotomy medicament in carious permanent teeth with pulp exposures’. I do not agree with this conclusion, and would argue that the results are reported with substantial spin. Close appraisal of Li’s paper reveals several methodological problems that have magnified the apparent benefit of MTA.

The first problem concerns the use of reporting guidelines, in this case the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Moher et al., 2009). The authors state that PRISMA was adhered to, but there is no information about registration of a review protocol establishing predefined primary and secondary outcomes or methods of analysis. As Shamseer and Moher put it:

‘Without review protocols, how can we be assured that decisions made during the research process aren’t arbitrary, or that the decision to include/exclude studies/data in a review aren’t made in light of knowledge about individual study findings?’ (Shamseer & Moher, 2015)

In the ‘Data synthesis and statistical analysis’ section, the authors state that the primary and secondary outcomes for this SR were formulated only after data collection. This post hoc selection leaves the review vulnerable to selective outcome reporting bias. In addition, there is no predefined rationale for the choice of summary measure or of the method used to synthesise the data.

The second problem relates to the post hoc choice of summary measure, in this case the odds ratio, and the use of a fixed-effect model in the meta-analysis (Figure 1).

Figure 1. Forest plot of 12-month clinical success (original).

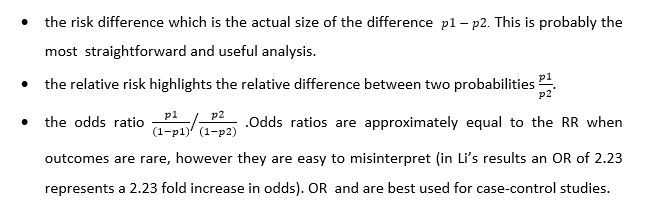

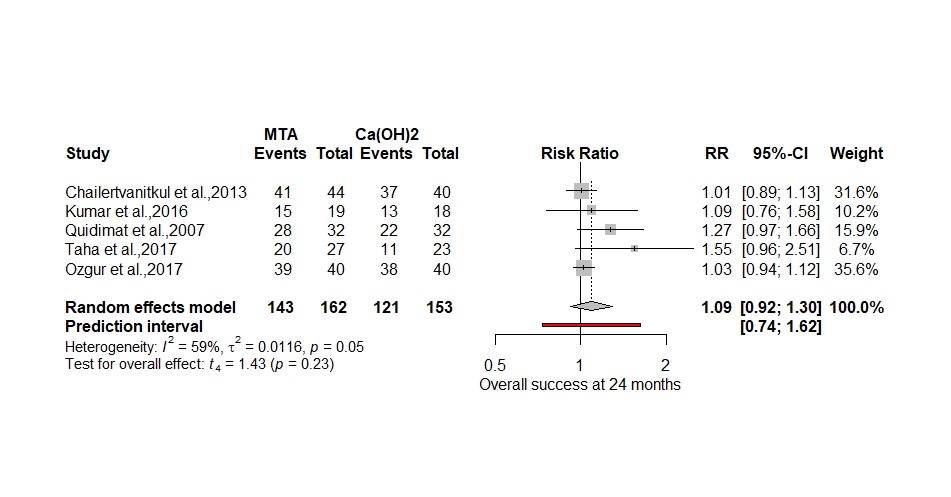

Of all the options available for analysing the five randomised controlled trials, the odds ratio combined with a fixed-effect model produced the largest significant effect size (OR 2.23, p = 0.02). Because the outcome data in the SR are dichotomous, the three most common effect measures are the odds ratio (OR), the relative risk (RR) and the risk difference (RD); the arcsine difference (ASD) is a further option when event proportions are close to 0 or 1. The authors gave no explanation of why the OR was chosen over these alternatives.
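To show why the choice of measure matters, here is a minimal Python sketch that computes the OR, RR and RD for a single two-arm trial. The counts are hypothetical and are not taken from Li et al. (2019). They were picked to show that when success is common, the OR sits much further from 1 than the RR.

```python
def effect_measures(events_t, n_t, events_c, n_c):
    """Return (odds ratio, risk ratio, risk difference) for treatment vs control."""
    risk_t = events_t / n_t
    risk_c = events_c / n_c
    odds_t = events_t / (n_t - events_t)
    odds_c = events_c / (n_c - events_c)
    return odds_t / odds_c, risk_t / risk_c, risk_t - risk_c


# HYPOTHETICAL trial: 45/50 successes with MTA vs 40/50 with calcium hydroxide.
or_, rr, rd = effect_measures(45, 50, 40, 50)
print(f"OR = {or_:.2f}, RR = {rr:.3f}, RD = {rd:.2f}")
```

With these made-up counts, a 10-percentage-point absolute difference in success comes out as roughly a doubling of the odds (OR 2.25). The same difference is only a 12.5% relative increase in risk (RR 1.125).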

The authors specifically chose a fixed-effect model for the meta-analysis because of the small number of studies. There are two problems with this. First, the five studies vary too much in methodology and patient factors, such as age, to assume a common true effect (in four studies the average age is approximately 8 years, while in one it is 30 years). Second, a small number of studies does not force the use of a fixed-effect model. With five studies, a random-effects model can be fitted using the Hartung-Knapp adjustment, which is intended to handle small numbers of studies (Röver, Knapp & Friede, 2015; Guolo & Varin, 2017).
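The sketch below contrasts a fixed-effect pooled RR with a Hartung-Knapp-adjusted random-effects RR. It is a minimal Python re-implementation under stated assumptions and is not the author’s R code. It uses inverse-variance weights, the DerSimonian-Laird estimator for the between-study variance (τ²) and a t quantile on k − 1 degrees of freedom. R’s meta package uses different defaults (for example, Mantel-Haenszel weights for the fixed-effect model), so results will not match it exactly. The five trials are hypothetical.

```python
import math

# 97.5th percentiles of Student's t (two-sided 95% critical values), df = 1..10.
# This table limits the sketch to at most 11 studies.
T975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
        6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}
Z975 = 1.959964  # standard normal 97.5th percentile

# HYPOTHETICAL trials: (successes MTA, n MTA, successes CH, n CH).
TRIALS = [(45, 50, 40, 50), (28, 30, 25, 30), (60, 70, 55, 70),
          (18, 20, 17, 20), (38, 45, 35, 45)]


def log_rr(a, n1, c, n2):
    """Log risk ratio and its large-sample variance (assumes no zero cells)."""
    return math.log((a / n1) / (c / n2)), 1 / a - 1 / n1 + 1 / c - 1 / n2


def weighted_mean(ws, ys):
    return sum(w * y for w, y in zip(ws, ys)) / sum(ws)


def pool(trials):
    ys, vs = zip(*(log_rr(*t) for t in trials))
    k = len(ys)
    # Fixed effect: inverse-variance weights, normal-based 95% CI.
    w = [1 / v for v in vs]
    mu_fe = weighted_mean(w, ys)
    se_fe = math.sqrt(1 / sum(w))
    # DerSimonian-Laird moment estimate of the between-study variance tau^2.
    q = sum(wi * (yi - mu_fe) ** 2 for wi, yi in zip(w, ys))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)
    # Random effects: each study's weight also reflects tau^2.
    ws = [1 / (v + tau2) for v in vs]
    mu_re = weighted_mean(ws, ys)
    # Hartung-Knapp: rescaled variance estimate with a t quantile on k - 1 df.
    q_hk = sum(wi * (yi - mu_re) ** 2 for wi, yi in zip(ws, ys)) / (k - 1)
    se_hk = math.sqrt(q_hk / sum(ws))
    t = T975[k - 1]

    def back(mu, half):  # back-transform estimate and CI to the RR scale
        return math.exp(mu), math.exp(mu - half), math.exp(mu + half)

    return {"fixed": back(mu_fe, Z975 * se_fe),
            "hk_random": back(mu_re, t * se_hk),
            "tau2": tau2}


result = pool(TRIALS)
for model in ("fixed", "hk_random"):
    rr, lo, hi = result[model]
    print(f"{model}: RR {rr:.2f} (95% CI {lo:.2f} to {hi:.2f})")
print(f"tau^2 = {result['tau2']:.4f}")
```

Röver, Knapp and Friede (2015) discuss a modification that stops the Hartung-Knapp interval from being narrower than the standard one. It is not applied in this sketch.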

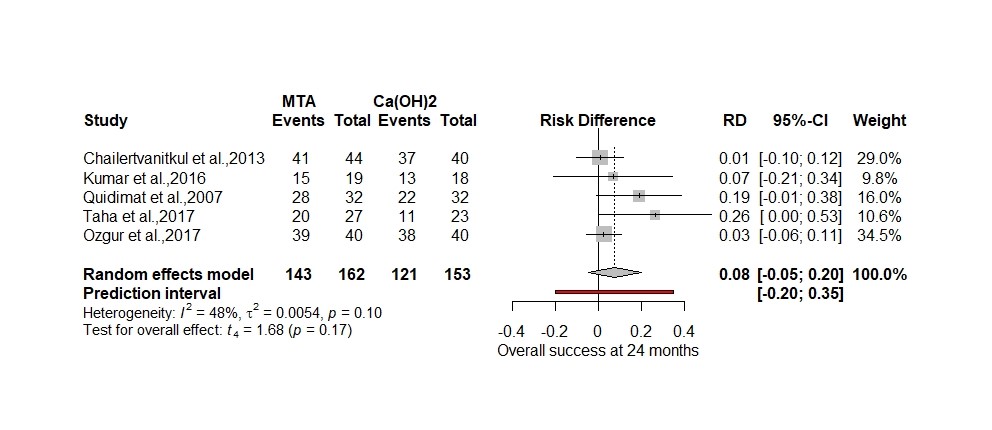

Below, I have reanalysed the original data using a more plausible random-effects model (Hartung-Knapp), with RR to show the relative difference between treatments and RD to show the absolute difference (Figures 2 and 3), using the metabin function from the meta package in R (Schwarzer, 2007).

Figure 2. 12-month clinical success using Hartung-Knapp adjustment for random effects model and relative risk

Figure 3. 12-month clinical success using Hartung-Knapp adjustment for random effects model and risk difference

Both analyses now show a small effect size (8% to 9%) that slightly favours MTA but is not statistically significant, as opposed to a 2.23-fold increase in odds. In the pulpotomy review, the OR magnifies the effect size by 51%, calculated as (OR − RR)/OR × 100. Holcomb et al. (2001) reviewed 151 studies that used odds ratios and found that 26% had interpreted the odds ratio as a risk ratio.
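The magnification formula can be checked with a few lines of Python. The sketch assumes an RR of 1.09, the upper end of the 8% to 9% range above. It also shows an exact identity for a single 2×2 table: for a fixed OR, the implied RR shrinks towards 1 as the control-group risk p0 rises. Here RR = OR / (1 − p0 + p0 × OR). The p0 values are illustrative only.

```python
def magnification(or_, rr):
    """Percentage by which an odds ratio overstates a risk ratio: (OR - RR) / OR * 100."""
    return (or_ - rr) / or_ * 100


def rr_from_or(or_, p0):
    """Risk ratio implied by an odds ratio when the control-group risk is p0."""
    return or_ / (1 - p0 + p0 * or_)


print(f"{magnification(2.23, 1.09):.0f}%")  # prints 51%

# Illustrative control-group success risks, not taken from the review.
for p0 in (0.05, 0.5, 0.8):
    print(p0, round(rr_from_or(2.23, p0), 2))
```

When success is rare (p0 = 0.05), the OR and RR nearly coincide. When success is common, as is typical for pulpotomy outcomes, they diverge sharply.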

A couple of further observations are worth noting. Even with the five studies combined, 199 participants would be needed in each arm for the analysis to be adequately powered (α = 0.05, power 1 − β = 0.8), which calls the significance of the authors’ result into question.
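For readers who want to see how this kind of sample-size figure arises, below is a minimal Python sketch of the standard normal-approximation formula for comparing two proportions. The success proportions are hypothetical and the output is not meant to reproduce the figure of 199. That figure depends on the event rates and the software used, and neither is given here.

```python
import math
from statistics import NormalDist


def n_per_arm(p1, p2, alpha=0.05, power=0.80):
    """Participants per arm to compare two proportions (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - beta
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


# HYPOTHETICAL success proportions, not taken from Li et al. (2019).
print(n_per_arm(0.80, 0.90))  # prints 197
```

Detecting a 10-percentage-point difference between high success rates already needs close to 200 participants per arm. This is why small pulpotomy trials, even when pooled, can struggle to reach adequate power.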

I have included a prediction interval in both of my forest plots to show the range within which the true effect in a future RCT could be expected to lie. This is more useful in clinical practice than the confidence interval (IntHout et al., 2016). Based on the RD meta-analysis, a future RCT could produce a result that favours calcium hydroxide by 20% or MTA by 35%, which is a wide range of uncertainty.
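Here is a minimal Python sketch of one common formula for a 95% prediction interval: the pooled random-effects estimate ± t(k − 2) × √(τ² + SE²). The inputs are hypothetical values on the risk-difference scale and are not the results of the reanalysis above. R’s meta package may use a different variant depending on version and settings.

```python
import math

# 97.5th percentiles of Student's t, df = 1..10 (standard table values).
T975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
        6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def prediction_interval(mu, se, tau2, k):
    """Approximate 95% prediction interval for the true effect in a new study.

    mu, se: pooled random-effects estimate and its standard error;
    tau2: between-study variance; k: number of studies (3 to 12 with this table).
    """
    half = T975[k - 2] * math.sqrt(tau2 + se ** 2)
    return mu - half, mu + half


# HYPOTHETICAL inputs on the risk-difference scale, not the review's results.
lo, hi = prediction_interval(mu=0.08, se=0.04, tau2=0.01, k=5)
print(f"95% PI: {lo:.2f} to {hi:.2f}")
```

The interval adds τ² to the sampling variance and uses a t quantile on only k − 2 degrees of freedom. As a result, with five studies it can span both benefit and harm even when the pooled estimate looks modestly positive.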

One of Li’s primary outcomes was cost-effectiveness. The paper concluded that there were insufficient data to determine a result, while also noting the high cost and technique sensitivity of MTA compared with calcium hydroxide. I would argue that, since there appears to be no significant difference in outcomes, calcium hydroxide must be the more cost-effective option on the available evidence.

In conclusion, researchers, reviewers and editors need to be aware of the harm spin can do. Many clinicians cannot read the full text of a research paper in detail because it is behind a paywall, so they rely heavily on the abstract for information (Boutron et al., 2014). Registering a research protocol that prespecifies appropriate outcomes and methods will help prevent post hoc changes to the outcomes and analysis. I would urge researchers to limit the use of odds ratios to case-control studies and to use the relative risk or risk difference, as these are easier to interpret. In meta-analyses, avoid a fixed-effect model if the studies do not share a common true effect, and include a prediction interval to explore possible future outcomes.

References

Boutron, I., Altman, D.G., Hopewell, S., Vera-Badillo, F., et al. (2014) Impact of spin in the abstracts of articles reporting results of randomized controlled trials in the field of cancer: The SPIIN randomized controlled trial. Journal of Clinical Oncology. [Online] 32 (36), 4120–4126. Available from: doi:10.1200/JCO.2014.56.7503.

Guolo, A. & Varin, C. (2017) Random-effects meta-analysis: The number of studies matters. Statistical Methods in Medical Research. [Online] 26 (3), 1500–1518. Available from: doi:10.1177/0962280215583568.

Holcomb, W.L., Chaiworapongsa, T., Luke, D.A. & Burgdorf, K.D. (2001) An Odd Measure of Risk. Obstetrics & Gynecology. [Online] 98 (4), 685–688. Available from: doi:10.1097/00006250-200110000-00028.

IntHout, J., Ioannidis, J.P.A., Rovers, M.M. & Goeman, J.J. (2016) Plea for routinely presenting prediction intervals in meta-analysis. BMJ Open. [Online] 6 (7), e010247. Available from: doi:10.1136/bmjopen-2015-010247.

Li, Y., Sui, B., Dahl, C., Bergeron, B., et al. (2019) Pulpotomy for carious pulp exposures in permanent teeth: A systematic review and meta-analysis. Journal of Dentistry. [Online] 84, 1–8. Available from: doi:10.1016/j.jdent.2019.03.010.

Moher, D., Liberati, A., Tetzlaff, J. & Altman, D.G. (2009) Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Annals of Internal Medicine. [Online] 151 (4), 264–269. Available from: doi:10.7326/0003-4819-151-4-200908180-00135.

Röver, C., Knapp, G. & Friede, T. (2015) Hartung-Knapp-Sidik-Jonkman approach and its modification for random-effects meta-analysis with few studies. BMC Medical Research Methodology. [Online] 15 (1), 1–8. Available from: doi:10.1186/s12874-015-0091-1.

Schwarzer, G. (2007) meta: An R package for meta-analysis. R News. [Online] 7 (3), 40–45. Available from: https://cran.r-project.org/doc/Rnews/Rnews_2007-3.pdf.

Shamseer, L. & Moher, D. (2015) Planning a systematic review? Think protocols. [Online]. 2015. Research in progress blog. Available from: http://blogs.biomedcentral.com/bmcblog/2015/01/05/planning-a-systematic-review-think-protocols/.

Vera-Badillo, F.E., Shapiro, R., Ocana, A., Amir, E., et al. (2013) Bias in reporting of end points of efficacy and toxicity in randomized, clinical trials for women with breast cancer. Annals of Oncology. [Online] 24 (5), 1238–1244. Available from: doi:10.1093/annonc/mds636.

Mark-Steven Howe, University of Oxford